Analyzing Entity Framework Performance

Entity Framework has a number of ways to optimize your queries. In this post, I explore a number of ways to make your queries a little bit faster.

When Entity Framework was introduced, it helped developers retrieve data and provide easy methods for managing that data with their relationships.

One of the things I love about Entity Framework is the ability to expand on the framework and to construct alternative ways of retrieving data. As they say, there's more than one way to...umm...cook an egg (no skinning of cats here).

For example, over the past two weeks, I worked on a project where I was pulling data from multiple tables (>3).

Since I was working with an EF 5.0 project (soon to be pushed to 6.0 LTS), I read about how the EF team introduced Eager Loading of Related Data using the .Include() method. So I started implementing it.

However, after working with it for a while, I noticed the query was really slow. I'm talking 10-20 seconds slow for a relatively small table.

Yeah. Oh yeah, we need to fix this.

Of course, I won't be able to show the exact example, but we can look at an existing database for this post.

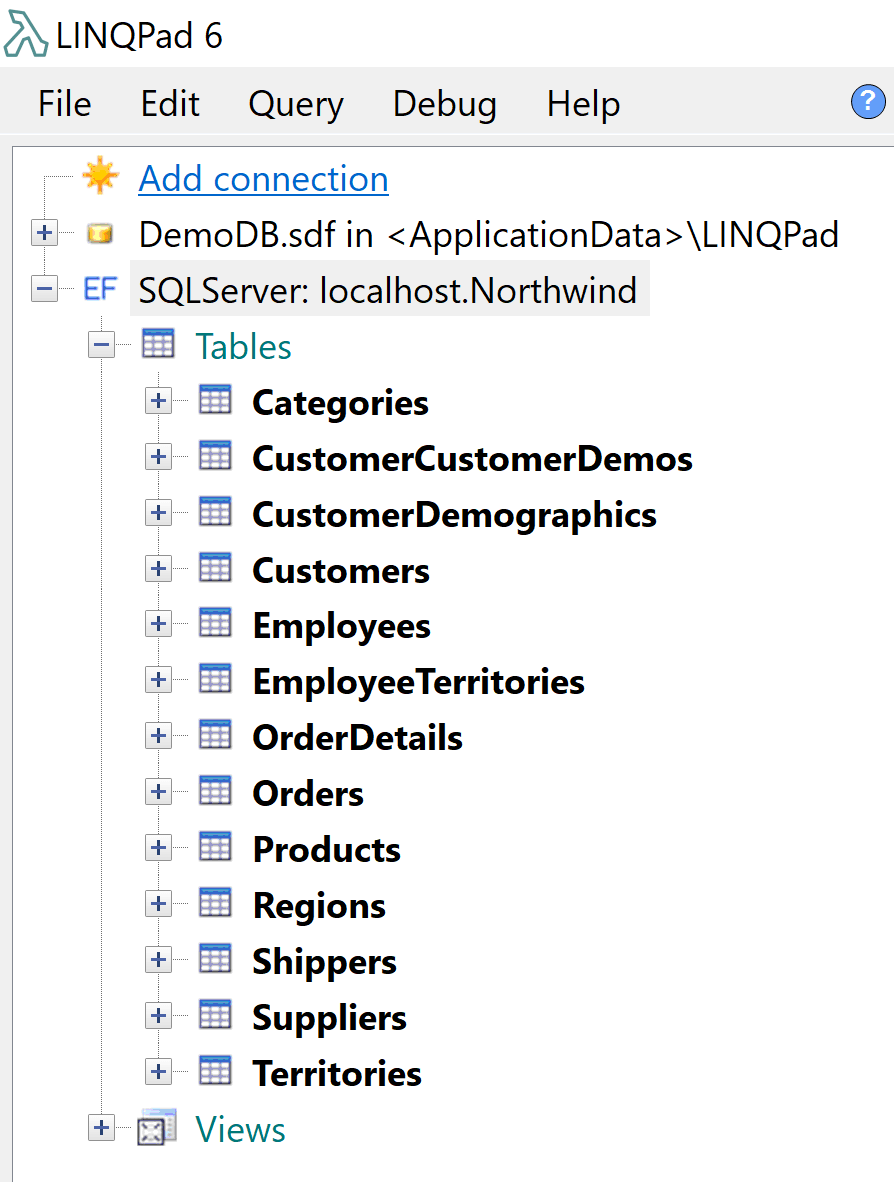

So let's fire up LINQPad with a Northwind database instance.

Since we have everything connected, let's run some tests.

Baseline Code

Let's say we have a user who's looking for a customer who ordered from a particular category.

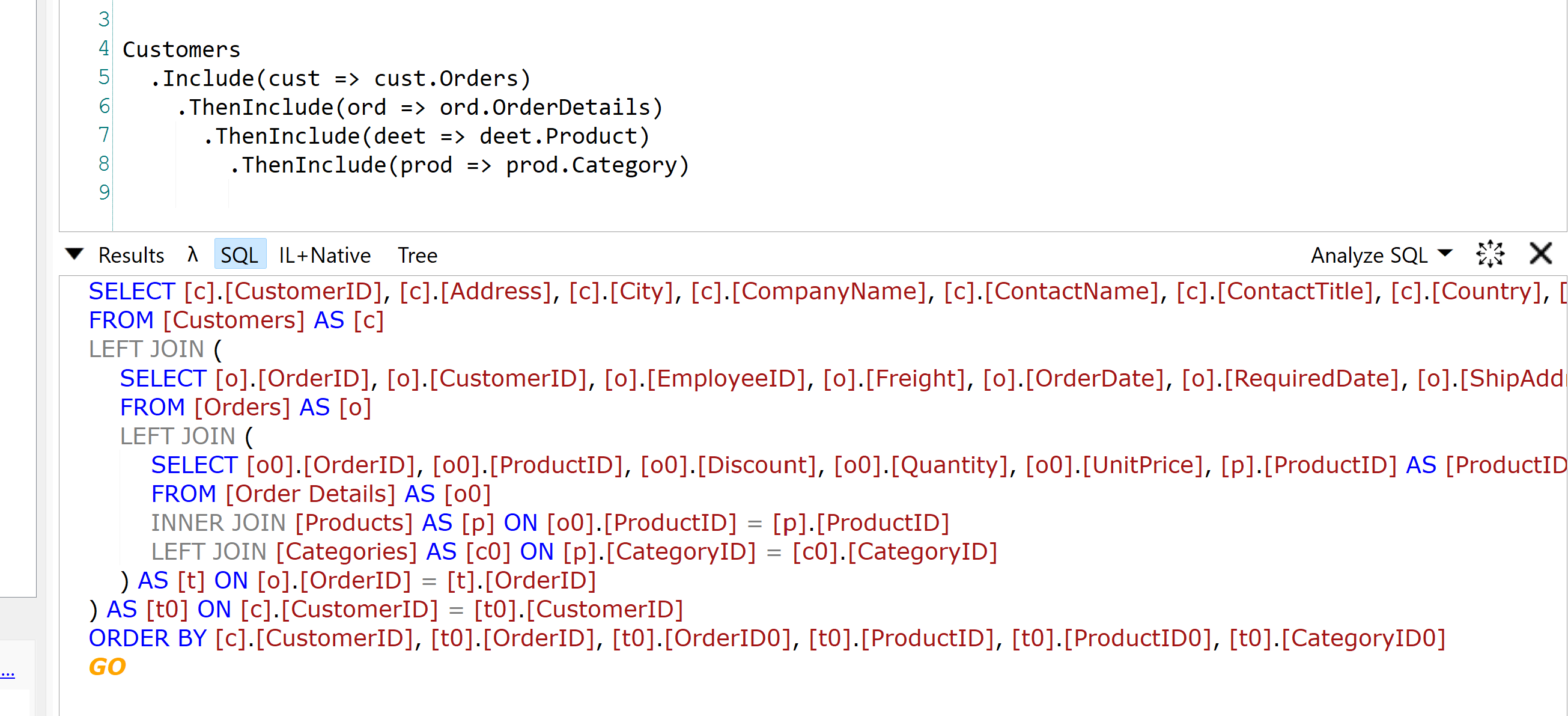

For our first attempt, the code looks like this:

Customers

.Include(cust => cust.Orders)

.ThenInclude(ord => ord.OrderDetails)

.ThenInclude(deet => deet.Product)

.ThenInclude(prod => prod.Category)

As you can see, there are a number of tables we need to touch to find our category: Customers, Orders, OrderDetails, Products, and Categories. This particular query takes 1-3 seconds (dependent on caching).

Thanks to LINQPad, here's the SQL for the LINQ query.

While the SQL is a little convoluted, the LINQ query builds a SQL statement to make one call to return all of the data.

Here are some tips on how to minimize the amount of .Include()/.ThenInclude() calls in your LINQ and maximize performance.

Less is More

Being Mr. Obvious, I'll state what we're all thinking: Don't use so many .Include()/.ThenInclude() statements. Sorry, it had to be said.

When you follow this "relationship-breadcrumb trail" (I'm working on a better word for this), you start to add an additional number of records to the results of your query.

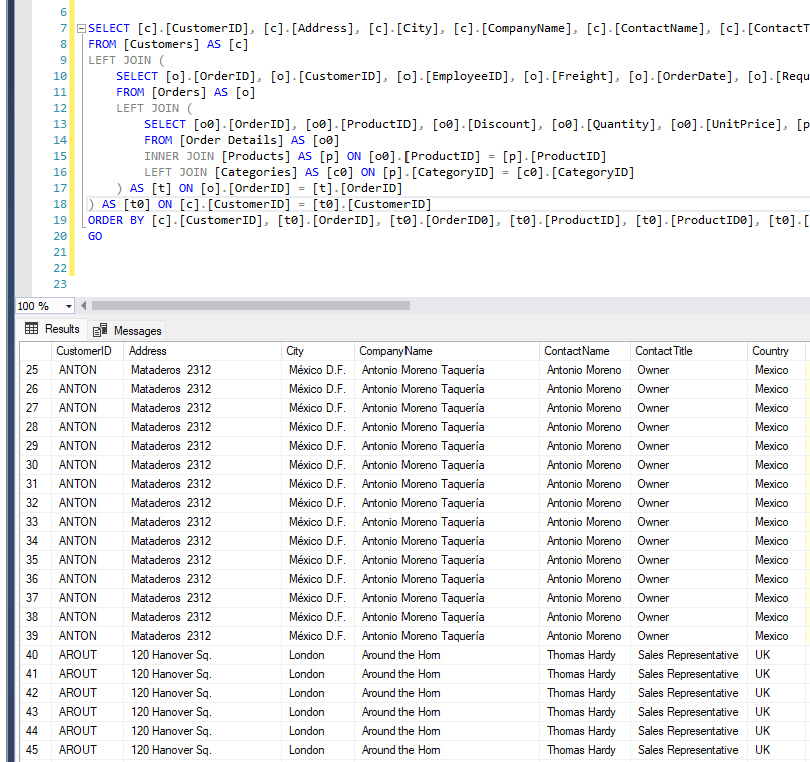

Even though there's only 91 records in our Customer table and we're joining on four other tables, the SQL generated by LINQ returns 2,157 records.

And this is a small database.

Adding a large number of .Include()/.ThenInclude() methods may be great for initial testing, but a refactoring may be recommended...nay nay...required to achieve better performance out of your LINQ query.

As a general guideline, the less joins you perform, the faster the query.

Indexing on Foreign Keys

While this isn't the case here with Northwind, I've seen tables created without indexes where people would query the data and complain about SQL Server is not performant at all and is a slug. Adding an index to a lookup field supercharges the lookup.

Identify which fields you're querying and either create an index in the table itself (using SSMS or Azure Data Studio) or in the EF model using Index attributes.

This may speed up your EF performance.

Async/Await Operations

Using Async/Await in most of your DbContext calls assures you that when a LINQ query is executed, it doesn't wait for the data to return until the process is finished.

These small code adjustments provide large returns when used in large systems. It's an important factor when optimizing and refactoring your code for performance.

Split Out Small Lookup Tables

If you have a small and simple table containing less than 20 records, it may make sense to load it separately and attach the entities manually.

For example, we could eliminate the .Include() for the Category, load all Category records into memory, and loop through each Product and assign the category to the Category Navigation property.

var categoryList = Categories; // in LINQPad, this loads the table.

var customers = Customers

.Include(cust => cust.Orders)

.ThenInclude(ord => ord.OrderDetails)

.ThenInclude(deet => deet.Product);

foreach (var customer in customers)

{

var orders = customer.Orders;

foreach (var order in orders)

{

var details = order.OrderDetails;

foreach (var detail in details)

{

detail.Product.Category = categoryList

.FirstOrDefault(e =>

e.CategoryID==detail.Product.CategoryID);

}

}

}

customers.Dump();

It eliminates an .Include(), but adds additional code.

Honestly, if I eliminate a join and use memory instead of hitting the database, I'd call it a win.

Use .AsNoTracking()

I know I've mentioned this before when creating performance-based LINQ queries, but it definitely bears repeating.

When you attach the .AsNoTracking() method to your LINQ query, this signals EF to ignore the EntityState/ChangeState when loading the entities from the tables.

This eliminates an extra step in the ORM process.

So while our code adds an extra method call, our SQL statement query stays the same and, unfortunately, still takes time to load, but is still a bit faster transferring the data into entities.

Customers

.AsNoTracking()

.Include(cust => cust.Orders)

.ThenInclude(ord => ord.OrderDetails)

.ThenInclude(deet => deet.Product)

.ThenInclude(prod => prod.Category)

If you are loading data where you create, update, or remove entities in a table, make sure you do not have the .AsNoTracking() method in your LINQ call. Use it only when you need to view your data.

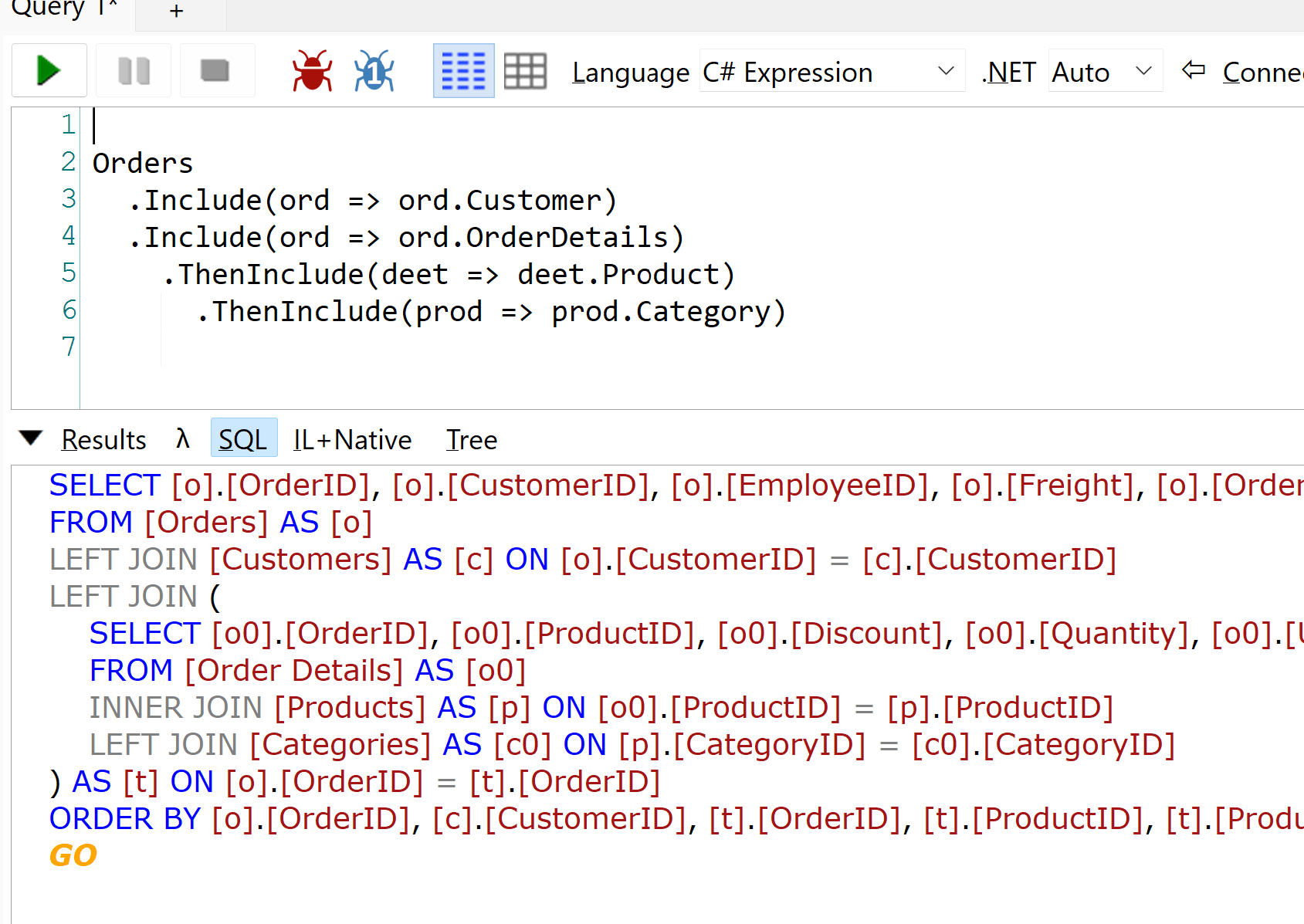

Start From a Different Location

What I mean by this is try starting with a different table.

In our example, we start with Customers as the starting table. Instead of Customers, we could start with Orders and perform the joins from that point on.

Orders .Include(ord => ord.Customer) .Include(ord => ord.OrderDetails) .ThenInclude(deet => deet.Product)

.ThenInclude(prod => prod.Category)

Notice how we have two .Include()'s instead of one.

While this query is a little more efficient and practical, I understand it may not fit your needs.

It pays to look at the LINQ query from different perspectives. You may be able to achieve the same result with a different approach.

Divide and Conquer

Finally, this is relatively new to me. I didn't know this existed and it's what I used to achieve the speed for my problematic query.

Remember at the beginning when I said LINQ creates one big SQL statement to execute with inner and left joins everywhere?

There is an easier way to create faster queries by taking the LINQ statement and breaking it up into smaller pieces.

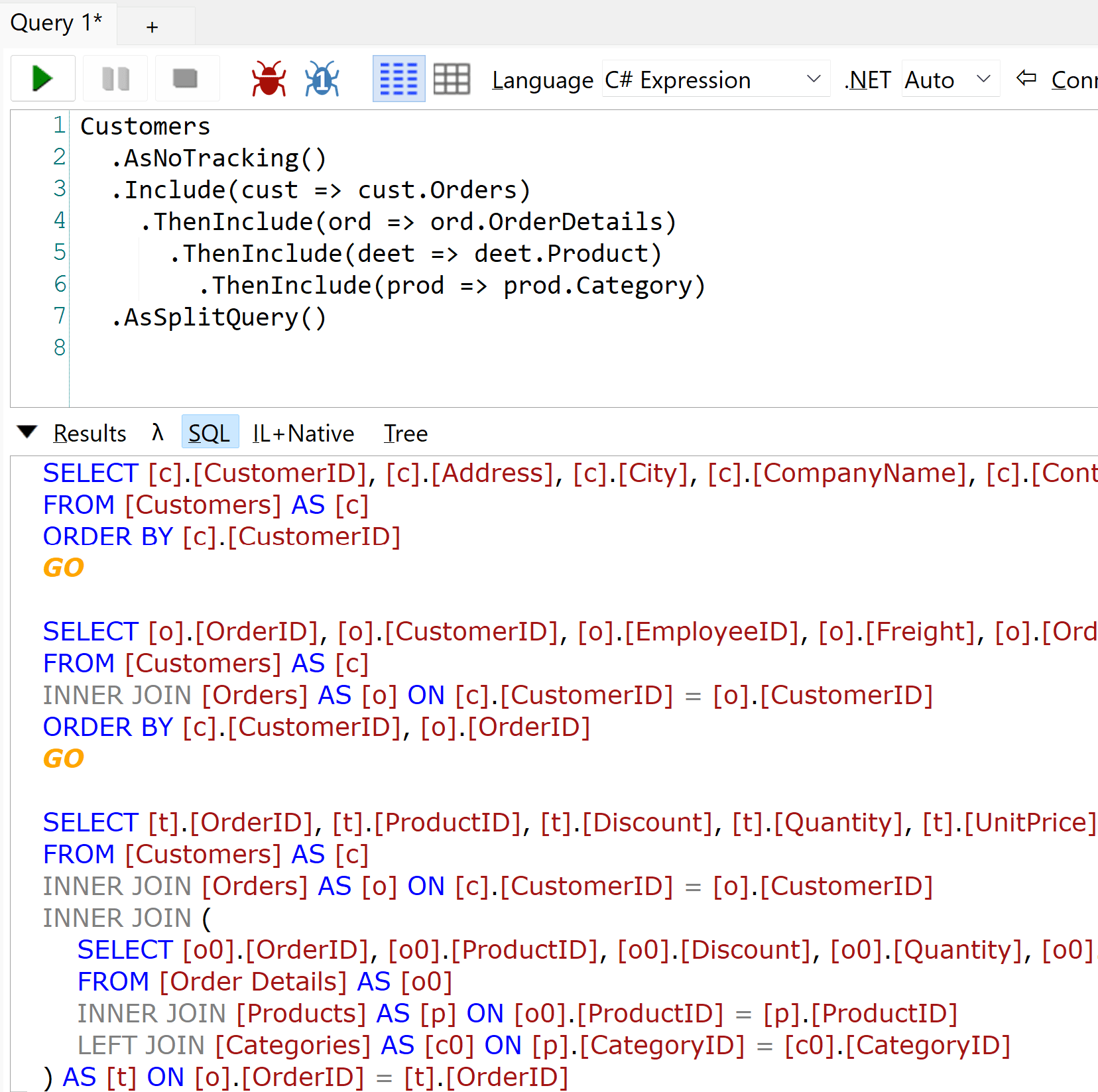

As you can see in our example, when I add the .AsSplitQuery() to the end of my LINQ statement, I get the following SQL statement(s):

Customers

.AsNoTracking()

.Include(cust => cust.Orders)

.ThenInclude(ord => ord.OrderDetails)

.ThenInclude(deet => deet.Product)

.ThenInclude(prod => prod.Category)

.AsSplitQuery()

Sending a group of SQL statements to return back multiple result sets makes sense, but if you look at the SQL statements a little closer, you'll notice the same customers SQL statement is being executed a second time with a join.

Hmm...that's strange.

Ok, here's my theory why this query is so fast.

- The first query of Customers is executed and becomes cached because it's a simple SELECT statement. No execution time at all, but becomes cached.

- The second query of Orders is also executed and joins with the customer table making this second query cached as well.

- With those two queries cached in memory, it then takes the entire query and runs it against the Customers table and Orders table...which are cached. The only two tables requiring a lookup are the Products and Category tables.

This caching strategy is beneficial in two ways:

- PERFORMANCE! The immediate gain of cached data at a data level

- It makes the SQL a little less convoluted, therefore not giving DBAs a stroke when EF sends SQL statements to the server (Hey, I said LESS convoluted, not clean) ;-)

When I tested this approach, I was sold this was the way to move forward and I immediately saw an improvement in performance.

Conclusion

In this post, we covered a number of ways to optimize an EF query where it's maintainable and easy to tweak to gain the performance in your own projects.

I must commend the EF Team and how much they've put into making this such a flexible framework. There are so many ways to achieve the same result.

How would you approach the query above? Do you have a troublesome query? Post your comments below and let's discuss more EF approaches.