Harnessing .NET and C# for Better SEO: How It Can Boost Your Website Rankings

With the latest .NET and C#, it's now easier to apply technical SEO to a website, provide quality content, and analyze search traffic.

Notice: This post is part of the Eighth Annual C# Advent Calendar for 2024. Check the C# Advent for more than 50 other great articles about C# created by the community. Thanks a lot to Matt Groves (@mgroves) for putting this together again! Great job, Matt!

Recently, I was asked to present on the topic of SEO to a C# user group (and thank you, Sam Nasir, for the opportunity). While ASP.NET has been (and always will be) my bread and butter, I feel SEO should be a secondary skill for developers who regularly build websites.

So, in preparing for the presentation, I wanted to integrate as much ASP.NET, C#, and SEO as possible. During the presentation, I mentioned several SEO and .NET techniques used in helping a public website gain more visibility through search engine/website best practices.

Source: YouTube

In this post, we'll focus specifically on technical SEO, which uses server-side techniques (in this case, C# and .NET) to improve a website's ranking and positioning in search engines. We'll also briefly touch on the 'M' word (marketing).

I briefly touched on certain topics in the presentation, but I feel they fell short because, of course, developers like to see code. This post goes a bit deeper by providing a couple of coding examples (mainly C# tips). It focuses on the following topics:

- Keyword Density

- Minifying HTML

- Keyword Research

Now, let's see how C# can boost your website's SEO.

Keyword Density

With the latest release of .NET 9.0, I was excited to see the CountBy/AggregateBy methods included.

Why?

Search engines analyze content and provide the best choice based on the topic's relevancy. One way search engines identify relevancy is to examine the number of keywords in a web page and how they relate to a topic.

The new CountBy method returns the number of elements in a sequence grouped by a key, while AggregateBy accumulates a custom value (such as a sum) for each key. In SEO terms, CountBy lets us identify the most prevalent keywords in a particular string or array.
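To make the difference between the two methods concrete, here is a minimal sketch of AggregateBy. The input data and the weighting rule (a title hit counts three times as much as a body hit) are assumptions made up for illustration; they are not taken from any search engine's documentation.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical input: each word is paired with where it appeared on the page.
(string Word, string Source)[] words =
[
    ("seo", "title"), ("csharp", "title"),
    ("seo", "body"), ("middleware", "body"), ("seo", "body"), ("csharp", "body")
];

// Assumed weights for illustration only: a title hit counts three times a body hit.
static int WeightOf(string source) => source == "title" ? 3 : 1;

// AggregateBy groups by key and folds each element into a running total per key.
Dictionary<string, int> weighted = words
    .AggregateBy(w => w.Word, 0, (total, w) => total + WeightOf(w.Source))
    .ToDictionary(pair => pair.Key, pair => pair.Value);

foreach (var (word, score) in weighted.OrderByDescending(pair => pair.Value))
{
    Console.WriteLine($"{word}: {score}");
}
```

CountBy is the special case where every element adds one to its key's total.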

If given some text, we can identify the most frequent words used in the post. Let's look at a simple example.

```csharp
KeywordResults = TextToAnalyze
    .Split([' ', '.', ','], StringSplitOptions.RemoveEmptyEntries)
    .Select(e => e.ToLowerInvariant())
    .Where(e => !_stopWords.Contains(e))
    .CountBy(e => e)
    .OrderByDescending(e => e.Value)
    .Where(e => e.Value >= 2)
    .ToList();
```

Before counting, the text is split into words using spaces, periods, and commas as delimiters, and each word is converted to lowercase.

Next, all filler words are removed so the CountBy method focuses only on meaningful, topical words. The _stopWords list contains the words to ignore.

```csharp
private string[] _stopWords =
[
    "a", "an", "on", "of", "or", "as", "i", "in", "is", "to",
    "the", "and", "for", "with", "not", "by", "was", "it"
];
```

The CountBy method then counts how often each word occurs. It returns a sequence of KeyValuePair<string, int> (not a Dictionary) where the Key is the word and the Value is the number of times it's used in the text.

Once the words are counted, the list is sorted by Value in descending order and filtered to words with two or more occurrences.

Of course, additional punctuation and stop words can be added for more accurate results.
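As one possible way to handle more punctuation, the sketch below swaps the Split call for a regular expression that extracts word-like tokens instead of listing every delimiter. This is a substitute technique, not the code from the presentation, and the extra stop words and sample text are assumptions chosen for the example.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

// A slightly larger stop-word set; a HashSet gives constant-time lookups.
HashSet<string> stopWords = new(StringComparer.OrdinalIgnoreCase)
{
    "a", "an", "on", "of", "or", "as", "i", "in", "is", "to", "the", "and",
    "for", "with", "not", "by", "was", "it", "this", "that", "be", "are", "at"
};

string text = "C# makes SEO easier. SEO, done well, helps a site; C# helps with SEO!";

// Match runs of letters, digits, '#' or apostrophes so "C#" and "don't" stay whole.
List<KeyValuePair<string, int>> keywords = Regex.Matches(text, @"[\p{L}\p{N}#']+")
    .Select(match => match.Value.ToLowerInvariant())
    .Where(word => !stopWords.Contains(word))
    .CountBy(word => word)
    .Where(pair => pair.Value >= 2)
    .OrderByDescending(pair => pair.Value)
    .ToList();

foreach (var (word, count) in keywords)
{
    Console.WriteLine($"{word}: {count}");
}
```

Filtering before sorting keeps the sort limited to the words that will actually be reported.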

For the best results, use one or two keywords for every 200 words, or aim for targeted keywords to make up 2-4% of the text.
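A density percentage like this can be checked with a few lines of C#. The helper below is a rough sketch: the name KeywordDensity, the delimiter list, and the sample sentence are all assumptions for illustration, and it only handles single-word keywords.

```csharp
using System;
using System.Linq;

// Percentage of words in the text that equal the keyword (case-insensitive).
static double KeywordDensity(string text, string keyword)
{
    char[] separators = [' ', '.', ',', '!', '?', ';', ':', '\r', '\n', '\t'];
    string[] words = text.Split(separators, StringSplitOptions.RemoveEmptyEntries);
    if (words.Length == 0)
    {
        return 0;
    }

    int hits = words.Count(w => w.Equals(keyword, StringComparison.OrdinalIgnoreCase));
    return 100.0 * hits / words.Length;
}

string sample = "Technical SEO helps rankings. Good SEO starts with fast pages and useful content.";
double density = KeywordDensity(sample, "seo");
Console.WriteLine($"Density: {density:F1}%");
```

Here the density is simply occurrences divided by total words, times 100, so a short sample sentence will report a much higher figure than a full-length post would.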

Based on the Google Patent regarding links, this fundamental concept can be expanded further to achieve better search results with context chunking. In the patent, Google looks at the link, and then examines the words to the left AND right of the link to determine the context and value of the link.
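The idea of looking left and right of a link can be approximated in C#. The sketch below is only an illustration of a context window, not Google's algorithm: the LinkContext helper, the window size, and the simple regular expressions are assumptions, and a real HTML parser would be more robust.

```csharp
using System;
using System.Linq;
using System.Text.RegularExpressions;

// Returns up to `window` words on each side of the first link in an HTML fragment.
static (string[] Left, string AnchorText, string[] Right) LinkContext(string html, int window)
{
    Match link = Regex.Match(
        html, @"<a\b[^>]*>(.*?)</a>", RegexOptions.IgnoreCase | RegexOptions.Singleline);
    if (!link.Success)
    {
        return (Array.Empty<string>(), string.Empty, Array.Empty<string>());
    }

    // Strip any remaining tags, then split the surrounding text on whitespace.
    static string[] Words(string fragment) =>
        Regex.Replace(fragment, "<[^>]+>", " ")
            .Split((char[]?)null, StringSplitOptions.RemoveEmptyEntries);

    string[] before = Words(html[..link.Index]);
    string[] after = Words(html[(link.Index + link.Length)..]);

    return (before.TakeLast(window).ToArray(), link.Groups[1].Value, after.Take(window).ToArray());
}

string sampleHtml =
    "<p>Learn how <a href=\"/minify\">HTML minification</a> improves page speed for visitors.</p>";
var context = LinkContext(sampleHtml, 2);

Console.WriteLine($"Left:   {string.Join(' ', context.Left)}");
Console.WriteLine($"Anchor: {context.AnchorText}");
Console.WriteLine($"Right:  {string.Join(' ', context.Right)}");
```

Running the keyword counting from earlier over the left and right words would give a rough picture of the topic surrounding each link.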

As mentioned in the video above, search engines look at a number of factors when it comes to links. It's best to provide as much information as possible so search engines can serve relevant pages to drive traffic to a site...specifically to YOUR website.

Minifying HTML

The performance of a website is extremely important and is one of the factors in determining a website's ranking in search engines.

As mentioned in "ASP.NET 8 Best Practices," next to security, performance is one of the most important parts of a successful website.

While GZip compression is another technology to implement, the ability to minify your HTML shrinks the payload even further. In earlier versions of .NET, this concept was achieved through ActionFilters for MVC.

With the Middleware pipeline, we can minify a webpage on its return trip to the client/browser.

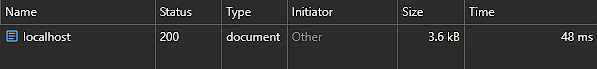

If we look at the Index.cshtml page without minifying the HTML, we can see it's at 3.6K.

With a Middleware approach, we can use Regular Expressions (aka black voodoo magic) to remove the spaces, carriage returns, and linefeeds from the HTML.

Middleware\MinifyHtmlMiddleware.cs

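```csharp
using System.Text.RegularExpressions;

namespace SEOWithCSharp.Middleware;

public class MinifyHtmlMiddleware(RequestDelegate next, ILoggerFactory loggerFactory)
{
    public async Task InvokeAsync(HttpContext context)
    {
        // Keep a reference to the real response stream so it can be restored.
        var stream = context.Response.Body;
        try
        {
            // Capture everything written downstream in a temporary buffer.
            using var buffer = new MemoryStream();
            context.Response.Body = buffer;

            await next(context);

            context.Response.Body = stream;
            buffer.Seek(0, SeekOrigin.Begin);

            var isHtml = context.Response.ContentType?.Contains("text/html", StringComparison.OrdinalIgnoreCase) ?? false;

            // Pass anything that isn't a successful HTML response through untouched.
            // (Returning without copying would send an empty body to the client.)
            if (!isHtml || context.Response.StatusCode != StatusCodes.Status200OK)
            {
                await buffer.CopyToAsync(stream);
                return;
            }

            using var reader = new StreamReader(buffer);
            var content = await reader.ReadToEndAsync();

            // Remove whitespace that follows other whitespace, except in text
            // leading up to a closing </pre>, then drop the remaining line breaks.
            // Note: the Replace calls also remove line breaks inside <pre> and
            // join words separated only by a line break.
            content = Regex.Replace(
                    content,
                    @"(?<=\s)\s+(?![^<>]*</pre>)",
                    string.Empty, RegexOptions.Compiled)
                .Replace("\r", string.Empty)
                .Replace("\n", string.Empty);

            // The body is now shorter, so any previously set Content-Length is stale.
            context.Response.ContentLength = null;
            await context.Response.WriteAsync(content);
        }
        finally
        {
            context.Response.Body = stream;
        }
    }
}
```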

Middleware\MinifyHtmlExtensions.cs

```csharp
namespace SEOWithCSharp.Middleware;

public static class MinifyHtmlExtensions
{
    public static IApplicationBuilder UseMinifyHtml(
        this IApplicationBuilder builder) =>
        builder.UseMiddleware<MinifyHtmlMiddleware>();
}
```

To use the Middleware, add this line to the Program.cs file.

app.UseMinifyHtml();

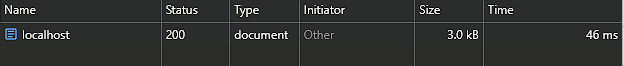

If we load the webpage now, we can see the results of our efforts.

The webpage is minified to a low 3K, a difference of 16%.

Of course, everyone's mileage may vary. If returning a heavy HTML webpage, the benefits should be apparent.
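To see where a percentage like this comes from, the same regular expression can be run in isolation and the byte counts compared. The sample HTML below is made up for the example, so its savings will not match the figures above.

```csharp
using System;
using System.Text;
using System.Text.RegularExpressions;

// Hypothetical page fragment with typical indentation and Windows line endings.
string html = "<html>\r\n  <body>\r\n    <h1>Hello</h1>\r\n    <p>Minify me</p>\r\n  </body>\r\n</html>";

// Same pattern as the middleware: strip whitespace that follows other
// whitespace, then remove the line breaks that are left over.
string minified = Regex.Replace(html, @"(?<=\s)\s+(?![^<>]*</pre>)", string.Empty)
    .Replace("\r", string.Empty)
    .Replace("\n", string.Empty);

int before = Encoding.UTF8.GetByteCount(html);
int after = Encoding.UTF8.GetByteCount(minified);
double savedPercent = 100.0 * (before - after) / before;

Console.WriteLine(minified);
Console.WriteLine($"{before} bytes -> {after} bytes ({savedPercent:F1}% smaller)");
```

Applying the same formula to the page sizes quoted in this post, (3.6 - 3.0) / 3.6, gives roughly 16.7%.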

While most webpages achieve a 6-16% decrease in file size by minifying HTML (which, in turn, improves overall performance), most developers are content to leave GZip compression in place without minifying.

Personally, I feel using both GZip compression AND minifying HTML would pack more of a punch for a website's performance.
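For anyone who wants to try both together, a minimal Program.cs might look like the sketch below. It uses ASP.NET Core's built-in response compression middleware alongside the UseMinifyHtml() extension from this post; the sample endpoint and the option values are assumptions for illustration, so adjust them to your own project.

```csharp
using Microsoft.AspNetCore.ResponseCompression;
using SEOWithCSharp.Middleware;

var builder = WebApplication.CreateBuilder(args);

builder.Services.AddResponseCompression(options =>
{
    // Compression over HTTPS is off by default; review the security
    // trade-offs for your site before enabling it.
    options.EnableForHttps = true;
    options.Providers.Add<GzipCompressionProvider>();
});

var app = builder.Build();

// Registered first, so it wraps everything after it and compresses the final output.
app.UseResponseCompression();

// The minifier runs inside the compressor, so it still sees plain, uncompressed HTML.
app.UseMinifyHtml();

// Hypothetical endpoint used only to produce some HTML to minify and compress.
app.MapGet("/", () => Results.Content(
    "<html>\n  <body>\n    <h1>Hello</h1>\n  </body>\n</html>", "text/html"));

app.Run();
```

The order matters here: if the minifier were registered before the compressor, it would buffer bytes that had already been compressed and try to treat them as text.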

Sidenote: With .NET 9.0, I ran into one issue with the new app.MapStaticAssets() endpoint extension method. Its new approach to optimizing static assets caused issues, and it will take additional discovery time to determine what's happening behind the scenes. In the past, I've tried dynamically building, bundling, and resizing images at request time, but it takes too long when a page full of images is requested. It's best to create a process for optimizing client-side assets using a Task Runner at build time. With that said, I reverted to app.UseStaticFiles() and the HTML minifies as expected.

Keyword Research

How do you know what to write about on a technical blog?

As Wayne Gretzky said, "Skate to where the puck is going to be, not where it has been." For example, since .NET 9.0 was recently released, it makes sense to write about its latest features since developers want to know how to use them.

.NET has a large ecosystem and can seem overwhelming...so what's a popular topic to write about?

There are four ways to identify what keywords are popular:

- Use a third-party service dedicated to SEO (Ahrefs.com, SEMRush, MajesticSEO, etc.). For additional tools, search for "SEO tools."

- Use Google Keyword Planner for volume, popularity, and average ad cost.

- Go old school with a search bar's Autosuggest/"People also search for" at the bottom of a search page. Usually, these are the most popular search terms people are typing into search engines.

- Use C# to analyze and data mine keywords.

"How can we use C# to do keyword research?"

There is a reasonably-priced service called DataForSEO.com which provides a REST API for keyword data, search engine result pages, and content analysis just to name a few of the services.

As a way to test drive the services, I was given $1 (yes, one dollar) for the playground and I've yet to use it all.

Once you sign up and receive your API key, each service is a simple C# call away. If you need an example of what APIs are available, they have an API Playground as well. The playground also produces a JSON result, so you can quickly create a C# model hierarchy using Visual Studio 2022's "Paste JSON As Classes" feature.
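As a rough sketch of that workflow, the example below deserializes a JSON payload into model classes with System.Text.Json. The JSON shape, property names, and numbers are hypothetical placeholders and not DataForSEO's actual response format; in practice, the classes would come from pasting a real playground result.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;
using System.Text.Json.Serialization;

// Hypothetical payload for illustration; not DataForSEO's actual response format.
string json = """
{
  "status_code": 200,
  "results": [
    { "keyword": "asp.net middleware", "search_volume": 1200 },
    { "keyword": "c# countby", "search_volume": 300 }
  ]
}
""";

KeywordResponse response = JsonSerializer.Deserialize<KeywordResponse>(json)
    ?? throw new InvalidOperationException("The response was empty.");

// Pick the keyword with the highest (made-up) search volume.
KeywordResult top = response.Results.OrderByDescending(r => r.SearchVolume).First();
Console.WriteLine($"{top.Keyword}: {top.SearchVolume}");

// Classes in the shape "Paste JSON As Classes" might generate, tidied up by hand.
public class KeywordResponse
{
    [JsonPropertyName("status_code")]
    public int StatusCode { get; set; }

    [JsonPropertyName("results")]
    public List<KeywordResult> Results { get; set; } = [];
}

public class KeywordResult
{
    [JsonPropertyName("keyword")]
    public string Keyword { get; set; } = string.Empty;

    [JsonPropertyName("search_volume")]
    public int SearchVolume { get; set; }
}
```

With real data, the same model could feed the keyword list back into the density checks from earlier in the post.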

Conclusion

These techniques are but a few of the ways developers can use their C# skills to boost website performance, gain more visibility for their site, and write more relevant content for readers.

- Identify primary keywords to be used in a blog post (using Keyword Research)

- Write a post containing those keywords and sprinkle them throughout the article to provide relevancy and context to the search engines (using Keyword Density)

- Apply Technical SEO concepts to the site making it faster (like MinifyHtmlMiddleware)

Web developers should have a general understanding of basic marketing (yes, I said the 'M' word) of a website by applying Technical SEO techniques at the very least.

Check out the GitHub repository below.

Did you find this post interesting? Want more Technical SEO content with C#? Post your comments below and let's discuss.