Review: DogFoodCon 2019

Our guest blogger, Andrew Hinkle, attended DogFoodCon 2019 and summarizes all of the exceptional sessions he attended.

DogFoodCon was a resounding success! This year I hit some sessions on the Microsoft stack that I'm accustomed to attending. I decided to branch out to learn more Agile tips. I also listened in on some tech trees I'm green on such as WebAssembly, NoSQL, and Git. I focused on my main stack this time around, but it's great to spread your wings and build up your soft skills as well. DogFoodCon has something for everyone, so if you missed it this year I hope the following session reviews will give you a taste of what to expect. I hope to see you there next year! Presentation slideshows are available in the DogFoodCon OneDrive.

DogFoodCon is growing

DogFoodCon's two-day event had an estimated 460 – 470 total attendees this year, mostly from the Great Lakes Region (OH, KY, WV, IN, MI, and Western PA). That's the largest attendance to date! DogFoodCon has generally been growing over the years. Here are the verified registered attendee counts: 2014: 384; 2015: 385; 2016: 400; 2017: 394; 2018: 403; 2019: 431. The total is an estimate because the number of volunteers, speakers, booth workers, and such always varies.
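The registered-attendee figures lend themselves to a quick year-over-year check. The small Python sketch below uses Python only as a calculator, with the counts copied from the paragraph above. It shows that 2019's gain of 28 was the largest in the series, and that growth from 2014 to 2019 was about 12%.

```python
# Verified registered attendees, copied from the figures above.
registered = {2014: 384, 2015: 385, 2016: 400, 2017: 394, 2018: 403, 2019: 431}


def year_over_year(counts):
    """Return {year: change from the previous year} for consecutive years."""
    years = sorted(counts)
    return {year: counts[year] - counts[prev] for prev, year in zip(years, years[1:])}


def total_growth(counts):
    """Fractional growth from the first year to the last year."""
    first, last = min(counts), max(counts)
    return (counts[last] - counts[first]) / counts[first]


changes = year_over_year(registered)
print(changes)  # {2015: 1, 2016: 15, 2017: -6, 2018: 9, 2019: 28}
print(f"{total_growth(registered):.1%}")  # 12.2%
```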

Fusion Alliance was gracious enough to host the Pre-Conference Security Day on Wednesday and the Azure Fundamentals AZ-900 session on Thursday. It's amazing all that DogFoodCon offers!

Due to conflicts, the Azure Fundamentals AZ-900 session (no exam, no voucher), originally scheduled for the Wednesday Pre-Conference day, was moved to Thursday, overlapping with the two-day event. DogFoodCon reached out to the attendees and explained the conflict. A colleague of mine was confused about some of the event's details, but once he reached the session everything was cleared up and it was a great learning experience for him.

DogFoodCon had 270 session submissions this year, up from around 150 – 180 in each of the last 2 years. That's a huge jump! With so many great submissions, it's definitely difficult for the committee to decide which sessions to approve.

It was my pleasure to volunteer as a tech blogger for the DogFoodCon conference this year. I interviewed three of the presenters and wrote five articles related to their sessions leading up to the conference.

- Developer Tips: C# Selenium with MSTest Basics

- Azure Multi-Factor Authentication - Part 1: Domain Users

- Azure Multi-Factor Authentication - Part 2: Guest Users

- PowerShell SQL Deployment – Part 1: PowerShell

- PowerShell SQL Deployment – Part 2: Azure DevOps

Thanks to DogFoodCon for sharing these statistics!

Session Categories

First off, I do not envy the organizers in developing a schedule. Presenters have limited availability due to work, life, travel, other conferences, and a multitude of other concerns. Sessions need to be spread out by category across the time slots, so attendees from all walks of life can find a session in each time slot that interests them. Compromises have to be made: some overlap happens, or sessions may not happen at all. Of course, bowls of M&Ms must be supplied without the brown ones or the presenters start flipping tables. At least I assume that's what they have stored in the presenters-only room. I tip my virtual hat in thanks and appreciation for all the efforts of the organizers to make an event of this size work so well.

DogFoodCon broke its sessions down by category across the following approximate time slots. I'd say the categories definitely helped the organizers set up the schedule well, since there's a fairly even distribution of categories across the time slots with just a few outliers. The [Modern Workplace] category was for MS Flow, which doesn't flow well into any other category (pun intended). Usually there's more than one [Front End/UX] session, so I'd count that as an outlier too.

| Category | Thu 10AM | Thu 11AM | Thu 1PM | Thu 2PM | Thu 3PM | Fri 10AM | Fri 11AM | Fri 1PM | Fri 2PM | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| [AI/ML] | 1 | | 1 | 1 | | 1 | 1 | 1 | | 6 |
| [AppDev] | 4 | 3 | 4 | 5 | 3 | 1 | 2 | 3 | 2 | 27 |
| [Azure/Cloud] | 1 | 2 | 1 | | 1 | 2 | 1 | | | 8 |
| [DevOps] | | | | | 1 | 1 | 1 | | | 3 |
| [Front End/UX] | | | | | | | | 1 | | 1 |
| [Human Skills] | | 2 | | 1 | 2 | | 1 | 1 | 2 | 9 |
| [Infrastructure/Security] | 1 | | | | | 2 | 2 | 1 | 2 | 8 |
| [Modern Workplace] | | | | | | | | 1 | | 1 |
| [Office 365] | 1 | 1 | 1 | 1 | 2 | | 1 | | | 7 |
| [SQL/BI] | | 1 | 1 | | | 1 | | | 1 | 4 |
| Total | 8 | 9 | 8 | 8 | 9 | 8 | 9 | 8 | 7 | 74 |

Sometimes a session could fit in multiple categories and a decision must be made. [AppDev] had 27 sessions, 3 times as many as the next-largest category ([Human Skills], with 9). Could this category be broken up?
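Here is the "3 times" claim worked out in numbers. This is a small Python tally of the table's Total column; the values are copied from the table, and the code is only a calculator. It shows [AppDev] held about 36% of the 74 sessions, exactly three times the next-largest category.

```python
# Session counts per category, taken from the Total column of the table.
sessions = {
    "AI/ML": 6, "AppDev": 27, "Azure/Cloud": 8, "DevOps": 3,
    "Front End/UX": 1, "Human Skills": 9, "Infrastructure/Security": 8,
    "Modern Workplace": 1, "Office 365": 7, "SQL/BI": 4,
}

total = sum(sessions.values())
ranked = sorted(sessions.items(), key=lambda item: item[1], reverse=True)
(top_name, top_count), (next_name, next_count) = ranked[0], ranked[1]

print(total)                                 # 74
print(top_name, f"{top_count / total:.0%}")  # AppDev 36%
print(next_name, top_count / next_count)     # Human Skills 3.0
```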

A colleague of mine mentioned he would have attended some of the sessions I attended had he known they covered Agile, Scrum, and related project management topics. The sessions "Example Mapping", "Software Requirements", and "Iterative Development" could have been categorized as [Project Management] to break up [AppDev] some more, and might have caught his attention.

The session "Usability Starts With Accessibility" could have been in the [Front End/UX] category. The sessions on Vue could have been added to that category as well. I'm sure we could break it up more, but this is enough to understand this point of view.

Breaking up the [AppDev] category into more categories could have helped the organizers better plan the schedule. However, after a quick analysis of the sessions using my own makeshift category adjustments, the distribution is still pretty good given all the other considerations mentioned at the beginning of this article.

My colleague didn't review the session descriptions based on the category name. Perhaps this is just anecdotal. Did you use the categories to determine if you should even read the session description?

Keynote - Day 1 - Keith Elder

Keith delivered a passionate presentation that left me choking back tears. I highly recommend listening to Keith speak especially on the ISMs and his experience at Quicken Loans.

He described how he started at Quicken Loans expecting to stay for just a few months. Over time he came to love the culture, built on the ISMs, and the people, until he could throw away his resume because there was nowhere else he wanted to be. The ISMs promote employee happiness, stability, and work-life balance. Here are just a few of them.

- Numbers and money follow; they do not lead
  - Develop your skills so you may create great products and innovations.
  - Money will follow.
- Yes before no
  - It doesn't mean that every idea will be approved
  - Be open to new ideas and give them a chance
  - I love the "KNOW" before "NO" attitude
- We'll eat our own dog food
  - I thought this ISM was so appropriate given we were at DogFoodCon
  - Take advantage of all the opportunities within your company
  - If your products are good enough for your clients, then they are good enough for you

Quicken Loans dedicates one week a quarter (every 10 weeks) to "Hack Week," set aside for innovation and improving the business. They treat it like a science fair, and some of these excursions have led to new companies forming. There is plenty to learn from this company's cultural ISMs that every business should incorporate.

[Office 365] Getting Up To Speed With Microsoft Graph Development - Brian T. Jackett

Microsoft Graph is a unified REST API for O365, Windows, Azure AD, and more. You can register your Enterprise applications to work with Microsoft Graph as well. It supports the standard HTTP verbs: GET | POST | PATCH | PUT | DELETE. Request URLs follow this format:

/{Resource}/{Entity}/{Property}/{Query}

For example: /users/james/events?$top=5
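The URL format above can be sketched as a small Python helper. The graph_url function is hypothetical. It assumes the public v1.0 endpoint (https://graph.microsoft.com/v1.0) and that keyword arguments map to $-prefixed OData query options such as $top. It only builds the URL string and does not acquire the OAuth token a real request needs.

```python
from urllib.parse import quote, urlencode

# Assumption: the v1.0 endpoint (Graph also exposes a beta endpoint).
GRAPH_ROOT = "https://graph.microsoft.com/v1.0"


def graph_url(*segments, **query):
    """Build a Graph request URL from path segments and query options.

    Keyword arguments become $-prefixed query options, e.g. top=5 -> $top=5.
    Segments and values are URL-encoded; '$' and ',' are left unescaped.
    """
    path = "/".join(quote(str(segment), safe="") for segment in segments)
    url = f"{GRAPH_ROOT}/{path}"
    if query:
        options = {f"${name}": value for name, value in query.items()}
        url += "?" + urlencode(options, safe="$,")
    return url


# "james" is a placeholder; real requests use a user id or userPrincipalName.
print(graph_url("users", "james", "events", top=5))
# https://graph.microsoft.com/v1.0/users/james/events?$top=5
```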

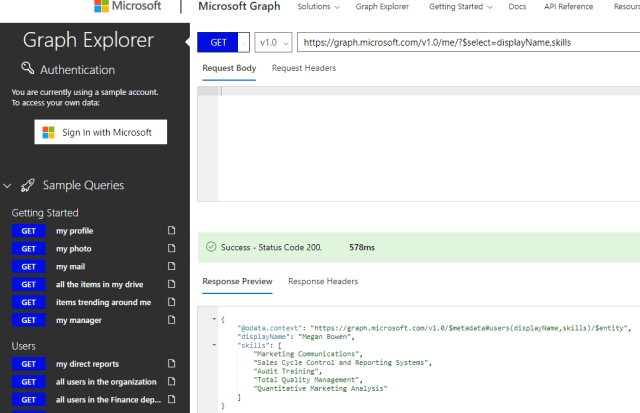

When you log in to the Graph Explorer with your MSDN account, you'll grant it permission to access a lot of your information. Once logged in, you'll have access to plenty of sample queries to learn more about you and your company. Here's an example using the sample account.

Microsoft Graph uses Azure AD identity. There are two types of permissions: Delegated and Application permissions. Be careful to grant only the appropriate level of permissions to the users and API. Permission names follow the format Resource.Operation.Constraint, and the constraint matters. User.Read lets the signed-in user read their own profile. User.Read.All grants access to every user's profile in the organization, not just their own. OAuth 2.0 authentication is also supported.
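The Resource.Operation.Constraint naming can be split apart mechanically, which can help when reviewing what an app requests. Below is a Python sketch with hypothetical helper names (parse_permission, reaches_all). It only handles names with two or three dot-separated parts. It treats an "All" constraint as a rough review flag, not as Microsoft's definition of scope.

```python
from typing import NamedTuple, Optional


class Permission(NamedTuple):
    resource: str
    operation: str
    constraint: Optional[str]  # e.g. "All"; None when the name has no constraint


def parse_permission(name: str) -> Permission:
    """Split a permission name of the form Resource.Operation[.Constraint]."""
    parts = name.split(".")
    if len(parts) == 2:
        return Permission(parts[0], parts[1], None)
    if len(parts) == 3:
        return Permission(parts[0], parts[1], parts[2])
    # Scopes such as "openid" or "offline_access" don't follow this pattern.
    raise ValueError(f"not a Resource.Operation[.Constraint] name: {name!r}")


def reaches_all(name: str) -> bool:
    """Rough review heuristic: flag names whose constraint is "All"."""
    return parse_permission(name).constraint == "All"


for requested in ["User.Read", "User.Read.All", "Mail.ReadWrite.Shared"]:
    print(requested, parse_permission(requested), "review!" if reaches_all(requested) else "")
```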

Add the Microsoft.Graph NuGet package to your application to help make calls to the Microsoft Graph service. The Microsoft.Graph.Auth NuGet package (preview) supports the OAuth 2.0 functionality.

Learn more by reading an Overview of Microsoft Graph.

Brian did a nice job reviewing the topic. He went over the concepts very well for an introductory session. I would have liked to have learned more about adding an Enterprise application to Graph to handle the authentication if it could be done in a few more minutes. I can see it being a follow-up session depending on its complexity. Good job!

[AppDev] Clean Architecture with ASP.NET Core - Steve Smith (Ardalis)

Steve Smith has written an Architecture eBook for Microsoft titled "Architecting Modern Web Applications with ASP.NET Core and Microsoft Azure" available for download for free.

Steve ran through the SOLID principles along with related principles such as DRY and New is Glue. He went over earlier architectures such as Hexagonal Architecture, Onion Architecture, and N-tier architecture. All of these concepts are integral to understanding Clean Architecture. I liked Uncle Bob's book on Clean Architecture, so I already had the basics coming into the session. They weren't necessary, though, as Steve walked us through the concepts of Domain Centric Design.

The Core Project contains your entities, aggregates, DTOs, interfaces, etc. This project should not depend on any of the other projects in the solution, though the other projects may depend on it. Any objects shared by multiple solutions should be isolated in a Shared Kernel and published/referenced as a NuGet package. In my experience the Shared Kernel should not change often; otherwise you run into what my team calls the NuGet Churn.

A side note on this Anti-Pattern I've dubbed the NuGet Churn. It occurs when you make a change to a NuGet package and then have to update the package version in the many applications that depend on it. Otherwise, those applications can't speak with some or all of their services. This is usually the result of a monolithic application that has been broken down into microservices, or an attempt at following DDD where the dependencies have not yet been inverted. It's a maintenance nightmare that requires redeploying every app dependent on the NuGet package and running tests to validate each deployment. My longer and more harried experience with this Anti-Pattern deserves its own article.

The Infrastructure Project contains most of the application's dependencies on external resources, such as ORMs like Entity Framework, file access, external API calls, etc. Use dependency injection to supply these instances via constructor injection.

The Web Project, UI, Console, etc. is the entry point into the application. It depends on your IoC container and the rest is injected as necessary.

There should be a Test Project corresponding to each of the other projects so you can unit test each of them.
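Here is the dependency rule in miniature. In this sketch, Python classes stand in for the C# projects, and every name is hypothetical; this is not Steve's sample repository. Core defines an entity, an interface, and a service that receives the interface through its constructor. Infrastructure implements the interface. The entry point is the only place that wires concrete types together, so a test project could pass in a fake repository instead.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Iterable, List


# ---- Core: entities and interfaces. Imports nothing from the other layers. ----
@dataclass(frozen=True)
class Session:
    title: str
    speaker: str


class SessionRepository(ABC):
    """Interface owned by Core; Infrastructure supplies the implementation."""

    @abstractmethod
    def list_sessions(self) -> List[Session]:
        ...


class SessionCatalog:
    """Core service: its dependency arrives through the constructor."""

    def __init__(self, repository: SessionRepository) -> None:
        self._repository = repository

    def titles_by(self, speaker: str) -> List[str]:
        return [s.title for s in self._repository.list_sessions() if s.speaker == speaker]


# ---- Infrastructure: implements Core's interface (an ORM would live here). ----
class InMemorySessionRepository(SessionRepository):
    def __init__(self, sessions: Iterable[Session]) -> None:
        self._sessions = list(sessions)

    def list_sessions(self) -> List[Session]:
        return list(self._sessions)


# ---- Entry point (Web/Console): the only place concrete types are wired up. ----
def compose() -> SessionCatalog:
    repository = InMemorySessionRepository([
        Session("Clean Architecture with ASP.NET Core", "Steve Smith"),
        Session("Making Use of New C# Features", "Brendan Enrick"),
    ])
    return SessionCatalog(repository)


if __name__ == "__main__":
    print(compose().titles_by("Steve Smith"))
```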

Check out his example Clean Architecture GitHub repository for more details. I still prefer the Feature Project pattern, but that's another article on its own too.

I have always enjoyed attending Steve Smith's sessions. His presentation style is top notch which is why I consume his blogs, podcasts, and PluralSight videos. Great job as always!

[AppDev] Example Mapping: The New Three Amigos - Thomas Haver

Creating a user story that everyone understands is difficult unless you include the Three Amigos in the process. The Three Amigos represent the perspectives of Business, Development, and Testing. Usually this means one person per perspective, though that's flexible; the goal is to strike a balance between no collaboration and too much.

Example Mapping builds upon the Three Amigos concept. Get stacks of index cards of four different colors. Yellow for User Stories, Blue for Rules, Green for Examples, and Red for Questions.

Start by defining a User Story using the pattern: As a [] I want [] So That []. Next fill out your Acceptance Criteria as Rules. Create Examples for each rule. Write any questions that nobody in the current group can answer.

- User Story: As a Google User, I want to type my search string and click search so that I will get a list of links matching my search.
  - Rule: Search results are empty
    - Example: /////////////////////
    - Question: Do we just tell the user no results were found or should we provide suggestions?
  - Rule: Search results return a list
    - Example: Dog Food Con
      - A link to DogFoodCon was returned
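The card layout above maps naturally onto a small data structure. The Python sketch below is one possible way to record a session's cards; the class and method names are hypothetical. It counts cards by color and lists any rule that still lacks an example, since the process calls for examples for each rule.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Rule:                                              # blue card
    text: str
    examples: List[str] = field(default_factory=list)   # green cards
    questions: List[str] = field(default_factory=list)  # red cards


@dataclass
class ExampleMap:
    story: str                                           # yellow card
    rules: List[Rule] = field(default_factory=list)

    def card_counts(self) -> Dict[str, int]:
        """Count the index cards by color."""
        return {
            "yellow": 1,
            "blue": len(self.rules),
            "green": sum(len(rule.examples) for rule in self.rules),
            "red": sum(len(rule.questions) for rule in self.rules),
        }

    def rules_without_examples(self) -> List[str]:
        """Rules nobody has illustrated yet."""
        return [rule.text for rule in self.rules if not rule.examples]


search = ExampleMap(
    story="As a Google User, I want to type my search string and click search "
          "so that I will get a list of links matching my search.",
    rules=[
        Rule("Search results are empty",
             examples=["/////////////////////"],
             questions=["Do we just tell the user no results were found "
                        "or should we provide suggestions?"]),
        Rule("Search results return a list",
             examples=["Dog Food Con -> a link to DogFoodCon was returned"]),
    ],
)
print(search.card_counts())  # {'yellow': 1, 'blue': 2, 'green': 2, 'red': 1}
```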

Context is king, as the session demonstrated by asking us to exchange our examples with another team. We were tasked with coming up with additional examples that would work for their rules. Based on their response, we had to write up what we thought their rules were. The reverse is just as true: rules may be worded in a way that leaves the developer and tester with a totally different understanding of the rule than the business person. Examples clarify the rules and thus the user story.

Here are a few final takeaways from the presenter. Sometimes features that describe technology updates or UI changes don't fit this model. Though you may be tempted to write examples using the standard Gherkin Given [] When [] Then [] pattern, keep the examples simple to illustrate the user stories. Don't just test the examples; test around the scenario as well. Teams may be resistant at first, but that may change as you make it a habit.

I liked how the session illustrated the concepts in a fun, interactive way. There was slight confusion about how much information could be shared. We were asked to write all the examples for a single rule on one card. When asked to make our own examples based on another team's, we kept to one card of examples per rule. The team that did this for us instead mixed the examples of all the rules together. That may have helped them understand the user story, but it confused what the actual rules were. Clarifying the steps may help the session go smoother, but this was very minor; I enjoyed the session and took home lessons learned.

[AppDev] Git Merge, Resets, and Branches - Victor Pudelski

This is a session I was looking forward to. I've been using Azure DevOps (formerly TFS) primarily in my work and personal experience. I'm used to the concepts of branching and merging, but not with Git and GitHub.

When I first decided to start creating and publishing articles like my Tips, I wanted them exposed publicly and GitHub fit that bill nicely. My experience to date has simply been to use Visual Studio with the GitHub extension to clone a repository locally, make changes to the articles and code samples, commit changes, sync, and then verify the changes were in GitHub. This was my first time working with GitHub via command line and I was excited!

Victor started with a cloned repository, made a change that he knew would conflict with changes in his master branch, and then used git to add his changes and commit them.

git add --all
git commit
git pull "{repository}"

The merge failed due to conflicts as expected. He opened the file with the conflict and showed us how git changed the file.

- <<<<<<< HEAD
  - The start of the conflict
  - Followed by the changes you made in your local copy of the master branch
- =======
  - Followed by the changes they made in the remote master branch
- >>>>>>> {hash code}
  - The end of the conflict

Resolve the conflict in one of three ways: keep your changes (between <<<<<<< HEAD and =======), keep their changes (between ======= and >>>>>>> {hash code}), or merge the two manually. Remove the three marker lines. Test, code review, and then stage (git add) and commit your changes. Pull the code again, merge to master, and it should work now. While you can merge straight to master, my experience with Azure DevOps has taught me to cautiously pull changes first and resolve conflicts locally before merging.
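To make the marker structure concrete, the Python sketch below resolves every conflict block by keeping one side. The resolve_conflicts function is hypothetical and not part of Git. It assumes the default two-way marker style, without the ||||||| base section that diff3 style adds. Manual merging is still the safer habit; this only shows which lines belong to which side.

```python
def resolve_conflicts(text: str, keep: str = "ours") -> str:
    """Resolve every Git conflict block in `text` by keeping one side.

    keep="ours"   -> lines between <<<<<<< and ======= (your local changes)
    keep="theirs" -> lines between ======= and >>>>>>> (the incoming changes)
    Assumes two-way markers only (no diff3 ||||||| base section).
    """
    if keep not in ("ours", "theirs"):
        raise ValueError("keep must be 'ours' or 'theirs'")
    kept = []
    section = None  # None = outside a conflict, else "ours" or "theirs"
    for line in text.splitlines(keepends=True):
        marker = line.rstrip("\r\n")
        if section is None and marker.startswith("<<<<<<<"):
            section = "ours"
        elif section == "ours" and marker == "=======":
            section = "theirs"
        elif section == "theirs" and marker.startswith(">>>>>>>"):
            section = None
        elif section is None or section == keep:
            kept.append(line)
    return "".join(kept)


# "1a2b3c4" is a made-up commit hash for illustration.
conflicted = (
    "Title\n"
    "<<<<<<< HEAD\n"
    "my local edit\n"
    "=======\n"
    "their edit from master\n"
    ">>>>>>> 1a2b3c4\n"
    "Footer\n"
)
print(resolve_conflicts(conflicted, keep="theirs"), end="")
```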

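git reset comes in three modes (<commit> defaults to HEAD, the last commit, when omitted):

git reset --hard <commit>     # lose changes: a total undo back to <commit>
git reset --mixed <commit>    # the default: staged changes return to the working directory, nothing is lost
git reset --soft <commit>     # move back to <commit>; the undone changes are left staged (as if added)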
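One way to keep the three reset modes straight is to model Git's three "trees": the commit HEAD points to, the index (staging area), and the working directory. The Python sketch below is a toy model. Each tree is reduced to a single snapshot label, and untracked files and per-path resets are ignored. It shows which trees each mode rewrites.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trees:
    head: str      # commit that HEAD (the current branch) points to
    index: str     # snapshot in the staging area
    worktree: str  # snapshot in the working directory


def reset(state: Trees, target: str, mode: str = "mixed") -> Trees:
    """Toy model of `git reset --<mode> <target>` on the three trees.

    --soft  moves HEAD only
    --mixed moves HEAD and resets the index (the default)
    --hard  moves HEAD and resets both the index and the working directory
    """
    if mode not in ("soft", "mixed", "hard"):
        raise ValueError(f"unknown mode: {mode}")
    index = target if mode in ("mixed", "hard") else state.index
    worktree = target if mode == "hard" else state.worktree
    return Trees(head=target, index=index, worktree=worktree)


# Last commit is C2; the working directory has uncommitted edits on top of it.
now = Trees(head="C2", index="C2", worktree="C2 + edits")
for mode in ("soft", "mixed", "hard"):
    print(mode, reset(now, "C1", mode))
```

After the soft reset, the index still holds C2, so C2's changes show up as staged relative to C1. After the hard reset, every tree is back at C1 and the edits are gone.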

Here's an example of some branching commands.

git checkout -b feat1

- Creates a branch named feat1 and switches to it
  - Recommendation is to use the feature or user story number in the name to reference what the branch was meant for

git branch

- Lists the branches

git add --all
git commit -m "comment"
git checkout master

- Switches to the master branch

git checkout feat1
git branch -d <branch>

- Switches back to feat1, then deletes a branch (Git won't delete the branch you currently have checked out)

Do not delete the repo and reclone. Do not rebase unless you know what you're doing.

There were plenty of commands relayed and additional discussions on branching strategies. I highly recommend reviewing Victor's slides. He did an excellent job at presenting the subject matter and paced through each section well. He answered questions throughout the session and left a great impression on me and others. Well done!

[AppDev] Making Use of New C# Features - Brendan Enrick

Brendan illustrated many new C# 8 features, along with some of his favorites from recent versions that aren't used as much as they could be.

- The dreaded Null Reference Exception, "Object reference not set to an instance of an object.", is despised by all! There are many ways to handle it.
  - Null Conditionals
      return user?.Nickname;
  - Null Conditionals on an indexer (this does not stop an index out of range exception if the first element does not exist)
      return this.Children?[0]?.Name;
  - Null Coalescing
      return user ?? new User();
  - Null Coalescing Assignment
      num ??= 42;
  - Nullable Reference Types (string?)
    - AKA Nullable Annotation Contexts
    - Enforce (through compiler warnings) the declaration of nullable types by project or by block of code
    - Enable or disable per project in the .csproj file:
        <Nullable>enable</Nullable>
    - Or per block of code:
        #nullable enable
        #nullable disable
- Output Variables
    if (int.TryParse(rawInput, out int inputInts))
- Discards
    if (int.TryParse(rawInput, out int _))
- Using Declaration Scope
    using var f1 = new FileStream("…", FileMode.Open);
- String Interpolation
    var fullName = $"{firstName} {lastName}";
- nameof expressions
    var message = $"{nameof(id)} must be set.";
- Tuples
    (bool success, int number) SafeParse(string s) => (int.TryParse(s, out int n), n);
  - Check out my article on Tuples
  - Like the Null tips above, there were plenty on Tuples
- Pattern Matching – is expressions
    if (shape is Rectangle rec) return rec;
- Pattern Matching – switch case guards
    case Square s when s.Side == 0:
- Default Interface Methods
    interface IBotCommand
    {
        IList<string> Words { get; }
        bool ShouldExecute(string word) => Words.Any(w => w == word); // Any() needs System.Linq
        Task Execute();
    }

I skipped some of the features mentioned, but this gives you some good references. I highly recommend researching all of the new features added in C# 8 and the most recent versions to find new tools of the trade that may simplify or enhance your code.

This room was crowded and required additional chairs which were promptly brought in by conference center staff. Several in the crowd noted the session was in demand as one of the only .NET sessions in the time slot. Brendan did an excellent job in presenting. He knew the new C# 8 features well as he's been using them and his other tips extensively for a while. Great session!

Keynote - Day 2 - Paul Kilroy

Paul started the Keynote by playing Huntington Bank's commercial "Looking Out for Each Other," which also represents how their team members work together. Team members step out of their comfort zone to help customers.

When working with teams resistant to change they reach out to the team to learn and understand their circumstances. Based upon their analysis working with the team they develop an action plan. For one team perhaps they are not familiar with the new technology so they will be given access to training materials and other opportunities to learn and grow. They are more interested in raising skills up which we should all appreciate.

The Keynote took a different tack than most Keynotes I've attended. Paul wrapped up his presentation in the first half of his allotted time and then opened the floor to questions for the remainder. Below are just a few of the questions and responses.

- Blockchain? Paul put forward that he thinks Blockchain has the potential to lower costs. As an example, title searches take time and effort to verify information that could be verified automatically by handling it with Blockchain. He did clarify that he was not including cryptocurrency in this discussion.

- Automation? They have been investing in RPA (Robotic Process Automation) to automate repetitive tasks that scales well while providing the agility to adjust as changes come up.

- Migrate or Rewrite software? They follow the strangler pattern to modernize systems. They separate concerns into microservices. They use git for their source control.

- Build or Buy? Huntington prefers to build, not buy. When companies are purchased through acquisitions, Huntington keeps its preferred stack and imports the data into its system. If the acquired company offers a product or feature, it is no longer offered, to keep the acquisition clean. They may implement the product or feature at a later date upon review, but again it will be done on their stack.

The Q&A format was interesting and provided insight into decisions that plague many companies. I especially liked the "Migrate or Rewrite" and "Build or Buy" discussions. Some compelling questions came off the cuff and fed off earlier answers. The Q&A might have benefited from some pre-submitted questions, as the attendees were caught off guard for the first few minutes. However, the end result was an excellent wealth of knowledge about the inner workings of an enterprise-level company that benefited all who attended.

[SQL/BI] Demystifying NoSQL: The SQL developer's guide - Matthew Groves

Matt ran a "choose your own adventure" style presentation, preceded by a short history lesson. Compared to a session I attended a couple of years ago that followed a similar format, this session went more smoothly. The history lesson explained that NoSQL (Not Only SQL) concepts were avoided for a long time due to concerns about hard drive space and performance. Most of those concerns are no longer valid given hardware improvements over the years.

My biggest issue with this format is the feeling that we may not have chosen the best path. What did we miss? Was there something important in one of the other paths? Overall, I felt like I got what I needed from the intro level session. While there were a couple advanced scenarios I think most followed along well enough.

Relational databases are not a one size fits all solution and NoSQL solutions address some of those areas. One situation is impedance mismatch where the objects representing the data do not match the normalized database table structure.

NoSQL document-oriented stores address this scenario by representing the data as XML or JSON documents. In a relational database you can take a similar approach by storing the XML or JSON in a column. You can then either let an ORM like Entity Framework perform the mapping, or use ADO.NET to read the data and deserialize it yourself.

NoSQL graph databases represent data as nodes, edges, and properties.

NoSQL wide-column stores use tables, rows, and columns. However, the column names and the number of columns can vary from row to row in the same table, more like key-value pairs.
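The data models above can be compared side by side. The Python sketch below uses plain dictionaries and lists as stand-ins; it is not any particular database's API, and the order and user data are made up. The relational version needs a join plus re-nesting to rebuild the object, which is the impedance mismatch. The document version round-trips the object as one JSON document.

```python
import json

# One order as the application sees it: a nested object.
order = {"id": 1, "customer": "Ann",
         "lines": [{"sku": "A1", "qty": 2}, {"sku": "B7", "qty": 1}]}

# Relational: normalized into two tables, so loading needs a join plus re-nesting.
orders_table = [(1, "Ann")]                        # (id, customer)
order_lines_table = [(1, "A1", 2), (1, "B7", 1)]   # (order_id, sku, qty)


def load_order_relational(order_id):
    customer = next(c for oid, c in orders_table if oid == order_id)
    lines = [{"sku": sku, "qty": qty}
             for oid, sku, qty in order_lines_table if oid == order_id]
    return {"id": order_id, "customer": customer, "lines": lines}


# Document store: the whole aggregate is saved as one JSON document.
documents = {1: json.dumps(order)}


def load_order_document(order_id):
    return json.loads(documents[order_id])


# Wide column: rows in the same table can carry different columns.
users = {"ann": {"name": "Ann", "email": "ann@example.com"},
         "bob": {"name": "Bob", "twitter": "@bob"}}

# Graph: nodes and edges, each with their own properties.
nodes = {"ann": {"label": "Person"}, "dogfoodcon": {"label": "Event", "year": 2019}}
edges = [("ann", "ATTENDED", "dogfoodcon", {"days": 2})]

print(load_order_relational(1) == load_order_document(1) == order)  # True
```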

Instead of listing all of the NoSQL variations, I'll refer you to a site that lists all of the NoSQL database systems, with a description and a link for each.

Once your hands aren't tied to the relational database model, new options and challenges start to arise. See my article on XML Shredding for a taste of one challenge you could face with a document-oriented store. Granted, some of these challenges are addressed by tools from Couchbase and other vendors that support declarative queries. I found this article had an entertaining way to describe the difference between imperative and declarative query languages.
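Here is the imperative versus declarative distinction in miniature. The Python sketch below answers the same question both ways over a few session titles from this review. It uses the standard library's sqlite3 module with SQL as the stand-in declarative language; Couchbase's own query language is not shown here. The imperative version spells out the loop, filter, and sort. The declarative version only states the result it wants.

```python
import sqlite3

rows = [
    ("Clean Architecture with ASP.NET Core", "AppDev"),
    ("Demystifying NoSQL: The SQL developer's guide", "SQL/BI"),
    ("WebAssembly Live!", "AppDev"),
    ("Getting Up To Speed With Microsoft Graph Development", "Office 365"),
]


def titles_imperative(rows, category):
    """Imperative: spell out how to loop, filter, and sort."""
    result = []
    for title, cat in rows:
        if cat == category:
            result.append(title)
    result.sort()
    return result


def titles_declarative(rows, category):
    """Declarative: state what you want; the query engine decides how."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE sessions (title TEXT, category TEXT)")
        conn.executemany("INSERT INTO sessions VALUES (?, ?)", rows)
        cursor = conn.execute(
            "SELECT title FROM sessions WHERE category = ? ORDER BY title", (category,))
        return [title for (title,) in cursor]
    finally:
        conn.close()


print(titles_declarative(rows, "AppDev"))
```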

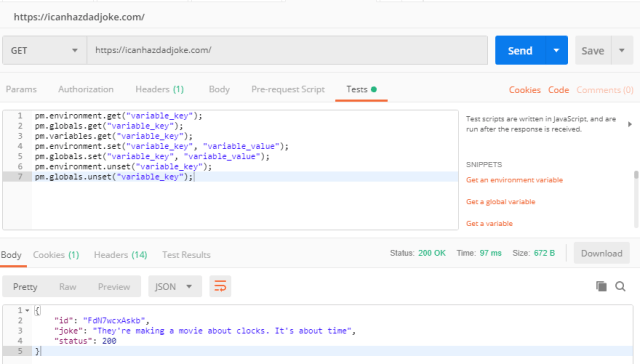

[AppDev] Supercharge Your API Testing using Postman - Umang Nahata

Umang appealed to my passion for telling dad jokes available through https://icanhazdadjoke.com/api. Each time he tested the API through Postman a new joke graced the screen to my delight. Ex. "They're making a movie about clocks. It's about time". Classic!

The steps/checklist to build your API tests:

- Gather details about API: url, documentation, examples

- Open Postman and get started

- Optional: test tab, test runner

Quick tips

- Postman offers many features, so Umang walked us through navigating Postman
- Postman can open web pages by setting the request URL to the web page URL
- View > Show Postman Console
- Tests tab – test examples (snippets) are listed on the right
  - Tests are run after a response has been received
  - Ex.
      pm.test("Status code is 200", function () {
          pm.response.to.have.status(200);
      });
  - console.log() writes to the Postman console

Create an environment for DEV, QA, PROD, etc. Each environment usually contains the same list of variables with different values for each environment.
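Here is the environment idea sketched outside Postman. One request template uses Postman's {{variable}} placeholders, and it resolves differently depending on which environment is selected. The Python function, environment names, and URLs below are hypothetical. Raising an error on an undefined variable is a choice made for this sketch so a typo fails loudly; it isn't a claim about Postman's behavior.

```python
import re

# Hypothetical environments: same variable names, different values.
environments = {
    "DEV":  {"baseUrl": "https://dev.example.com", "apiKey": "dev-key"},
    "QA":   {"baseUrl": "https://qa.example.com", "apiKey": "qa-key"},
    "PROD": {"baseUrl": "https://api.example.com", "apiKey": "prod-key"},
}

VARIABLE = re.compile(r"\{\{([^{}]+)\}\}")


def resolve(template, environment):
    """Replace each {{name}} with its value from the selected environment."""
    def substitute(match):
        name = match.group(1)
        if name not in environment:
            # Design choice for this sketch: fail loudly on an undefined variable.
            raise KeyError(f"undefined variable: {name}")
        return str(environment[name])
    return VARIABLE.sub(substitute, template)


for env in ("DEV", "PROD"):
    print(env, resolve("{{baseUrl}}/jokes?key={{apiKey}}", environments[env]))
```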

Import settings, collections, environments, headers, and swagger.

- Import swagger by clicking Import > Paste Raw Text > paste the JSON > click Import; a collection is created matching the swagger input
- The test runner runs a collection's tests for the selected environment
  - Tests are run sequentially in the order they appear in the collection
  - Build workflows to run the tests in the order you want
  - Requests are chained by running scripts before the request is sent or after the response is received from the server
- Random data function
    pm.environment.set("RandomMonth", _.random(1, 12));

I'm not a big fan of using random data in my tests. They can be powerful when done right. I just haven't seen it done right in my experience. If I write a test I expect it to pass with the data I provided. If I use random data and I don't filter it properly I may get unexpected results that return false negatives. That is not something I want to deal with during any deployment especially for a smoke test on a production deployment. I prefer stable tests so I avoid the random.
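If random data does find its way into tests, one common way to keep failures reproducible is to seed the generator and log the seed. A failing run can then be replayed with exactly the same data. Below is a Python sketch with a hypothetical random_months helper standing in for the _.random(1, 12) call above; it is not a Postman feature.

```python
import random


def random_months(count, seed):
    """Random months 1-12 (inclusive, like _.random(1, 12)), reproducible from the seed."""
    rng = random.Random(seed)  # private generator; leaves the global random state alone
    return [rng.randint(1, 12) for _ in range(count)]


seed = random.randrange(2**32)
print(f"seed={seed}")  # log it so a failing run can be replayed exactly
months = random_months(5, seed)
print(months)
```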

Discussion on Room Requirements (wide vs. long)

Umang's presentation drew in a crowd requiring additional chairs to be brought in within the first 5 minutes of the session. Unfortunately, the Postman screen had a lot going on so the font size was small. I was near the back of the room and I could not read much of what was on the screen. It was fine for those in the front half. Others left due to this issue and some of us shifted closer.

This session would have been better in a wider room than the long room it was held in. Umang took this in stride and accommodated by zooming in, more frequently toward the end as it became a habit. I think this issue can be mitigated in the future by improving communication between the speakers and coordinators regarding room requirements.

Coordinators could ask if the presentation will use an IDE with small fonts like Postman, Visual Studio, etc. or perform a live demo. If yes, then ask if they would prefer a wider room so attendees will be closer to the screen. If a wider room is not available, then remind the presenter to zoom in frequently on anything important. The presenter of course should keep this in mind and let coordinators know up front. Of course this is all 20/20 hindsight and it’s a team effort on both sides to get this right.

[AppDev] Iterative Development: The Paper Airplanes Game - Natalie Lukac and David Knighton

The Paper Airplanes Game is a fun interactive game presented by the team at https://scrumdorks.com/. We played a variation of the Paper Plane Game – Practitioners Agile Guidebook. Teams of 3-4 were formed. Following a simplified Agile process each team went through 3 iterations. Each team member was only allowed to make a single fold before passing it to the next "developer". We only counted the number of planes that passed the test and ignored work in progress planes and those that failed. To pass the test the plane had to fly about 10 feet marked out on the floor with tape.

My team consisted of 3 people and for some reason we named ourselves after the Princess Bride characters. My Name Is Inigo Montoya! Classic!

Iteration 1:

- 3 minutes – Design/Planning

- As a team we discussed different paper airplane designs

- We settled on the standard Dart design to have as few folds as possible

- We decided that we would perform an assembly line and as each of us finished a plane we would test the plane

- We estimated we could have 10 successful planes

- 5 minutes – Build/Test/Deploy

- We tried to cut out a couple of the folds, but it caused the planes to crash and burn (still not sure how we managed that :P), so we adjusted midway to the standard dart folds

- Being a little crowded, some of our planes veered into developers on other teams so we called interference and tried again

- We completed/deployed 11 successful planes

- 2 minutes – Retrospective

- Each developer stopping the assembly line to run a test slowed production significantly

- Adjusting mid-sprint back to all the standard dart folds produced a better-quality product

Iteration 2:

- 3 minutes – Design/Planning

- We decided to designate one developer as the QA tester who would break off the assembly line once a minute to perform the tests

- While the QA person was testing the 2 remaining developers would pass the planes back and forth to each other

- We estimated we could have 18 successful planes

- 5 minutes – Build/Test/Deploy

- The assembly line worked mostly as expected, but we would sometimes get stuck waiting for each other

- In the middle of the sprint when we found ourselves waiting we would start a new plane and instead of feeding the planes to each other we moved the planes into a backlog pile

- We completed/deployed 23 successful planes

- 2 minutes – Retrospective

- We liked the concept of the backlog pile

Iteration 3:

- 3 minutes – Design/Planning

- We decided to incorporate the backlog piles

- The QA person would feed his planes into whichever backlog was lowest and would pull from the highest to help balance

- We estimated we could have 25 successful planes

- 5 minutes – Build/Test/Deploy

- The assembly worked mostly as expected and the backlogs worked well

- Unfortunately, we were getting tired at this point and fell into old habits of performing folds in a different order

- This caused bugs in the software to form as the next developer was expecting the code, I mean folds, in a specific stage/pattern

- This in turn caused delays to determine the next fold or we introduced a defect (technical debt)

- The DEV/QA person identified the defect before flying the plane and fixed the bug in some cases

- Even with the defects introduced, the improved process ensured that most of the planes were of good quality

- We completed/deployed 25 successful planes

- 2 minutes – Retrospective

- We resolved to review the order of the folds to make the process smoother going forward

- Get some rest
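The estimates and results from the three iterations can be tallied to show the trend. Below is a small Python calculation, using only the 5-minute build window and the plane counts from the notes above. Throughput more than doubled from iteration 1 to iteration 3, and the final estimate was exact.

```python
BUILD_MINUTES = 5  # each Build/Test/Deploy step was 5 minutes

# (estimated, completed) successful planes per iteration, from the notes above.
iterations = [(10, 11), (18, 23), (25, 25)]


def summarize(iterations, minutes=BUILD_MINUTES):
    """Return (planes per minute, completed minus estimated) for each iteration."""
    return [(completed / minutes, completed - estimated)
            for estimated, completed in iterations]


for number, (rate, miss) in enumerate(summarize(iterations), start=1):
    print(f"Iteration {number}: {rate:.1f} planes/min, {miss:+d} vs. estimate")
```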

The other teams weren't as successful. Some of their issues were as follows.

- They struggled to develop a quality product that could pass the test

- They didn't form an assembly line and went sequentially

- Each team member kept performing every role like we did in the first iteration

I really enjoyed this session and wish more attendees had joined in the fun. This exercise illustrated many of the benefits of iterative development: Design/Planning > Build/Test/Deploy > Retrospective > Repeat. Our team embraced the concept. Building upon prior iterations, we learned and improved greatly from the beginning of the session to the end. While the other teams struggled in some aspects, they too improved by the end. I highly recommend participating in the Paper Airplanes Game as an entertaining way to learn iterative development.

[AppDev] WebAssembly Live! - Mike Hand

Code is written in a language such as C, C++, or Rust, compiled to WebAssembly (WASM), and then JavaScript calls the WebAssembly. There's a performance gain with WASM because it's already compiled code. JavaScript, by contrast, is compiled Just-In-Time (JIT), which carries a performance hit.

Dynamic types, such as a field that can hold either an int or a string, are not optimized. WebAssembly cannot touch the DOM or anything visible on the screen directly; that has to go through JavaScript. Client-side Blazor depends on WASM.

Mike recommended installing Emscripten and demonstrated it with C++ code; there are other ways to build WASM files from other languages. In his C++ file (.cpp) he wrote a simple function, addTwoNumbers.

em++ demo.cpp -o demo.html

The command created the files demo.html, demo.js, and demo.wasm. There's a lot of generated code in these files; most of the JavaScript is shims to make the code work across browsers. Depending on your usage, some of it can be removed. Mike was able to remove all but a few lines for the demo, as noted shortly. Understand what the generated code does before trying to optimize by removing any of it.

Mike added a kind reminder that you need to be aware of CORS and how to work with it.

emrun --port 8080 demo.html
python -m SimpleHTTPServer 8080

(On Python 3, the equivalent is python -m http.server 8080.) Mike fell back to the Python web server because emrun reported errors about not finding a browser.

Delete everything but the body and the <script…> tag from the html file. Only about 20 lines out of the demo.js were necessary.

Emscripten must be told to export the methods publicly.

em++ demo.cpp -s EXPORT_ALL=1 -o demo.js

Open the HTML page in a browser. In the JavaScript console you can call the WASM function, which shows up under this name:

__Z13addTwoNumbersii

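This name comes from C++ name mangling, which encodes the function's parameter types into the symbol (the trailing ii stands for two int parameters). The exact scheme depends on the compiler. Resolve it by wrapping the C++ function in an extern "C" block, which turns off mangling so the function is exported as plain _addTwoNumbers: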

extern "C" { /* C++ code */ }

Now call the method in the javascript console.

addTwoNumbers = Module._addTwoNumbers

Passing invalid types will produce unexpected results, as JavaScript will coerce the values to fit the parameter types.

To export only specific functions instead of everything, list them explicitly:

em++ demo.cpp -s EXPORTED_FUNCTIONS="['_main','_addTwoNumbers']" -o demo.js

This limits the exposed functions to the comma-delimited list inside the square brackets.

WebAssembly is still cumbersome at this time, because browsers haven't standardized on implementation yet, though it's in the works.

Mike's presentation went fairly smoothly even though it involved a live demo throughout. I thought the pacing was nice and worked out well. Good job Mike!

Conclusion

2019 put another great DogFoodCon conference in the books. It was my pleasure to interview several presenters and write articles on their sessions' topics. I enjoyed, learned, and reinforced my understanding of many concepts. I can't thank DogFoodCon enough for the opportunity to volunteer as their tech blogger and for answering all of my questions without hesitation.

Did you attend DogFoodCon this year? What was your favorite session? Did this review help you as a presenter or attendee? If you've never been to DogFoodCon before are you interested now? Tell us about your experiences in the comments below, on Twitter #DogFoodCon or LinkedIn!