The Two Tools I Use To Find Broken Links On My Site

So how many broken links (404s) do you have on your site? Today, I talk about the two utilities I use for finding broken links on my site, and how they can do the same for yours.

One of the basics of running a website is making sure you don't have any broken links on it. A broken link sends a visitor to an error page instead of the content they wanted, and that's a bad experience for your users.

There are a number of utilities to help out with this tedious but important task. I want to go over two of them with you today.

If you have a huge site, how can you afford to go through every single page to find broken links? It can be a daunting task.

That's why I'm covering my favorite tools today in order to make your site a little better in the long run.

Xenu Link Sleuth

Xenu Link Sleuth is a downloadable client and runs pretty darn quick for a small utility. I ran it against my site, and it took a total of a minute and a half to scan the whole thing.

Its simple interface shows whether your site has any internal or external broken links. Everything is in a list view, so it's very easy to navigate from link to link.

After Xenu is done spidering your site, it asks if you would like the report sent to an FTP site, or you can just press OK and have the report display in a browser to view later.

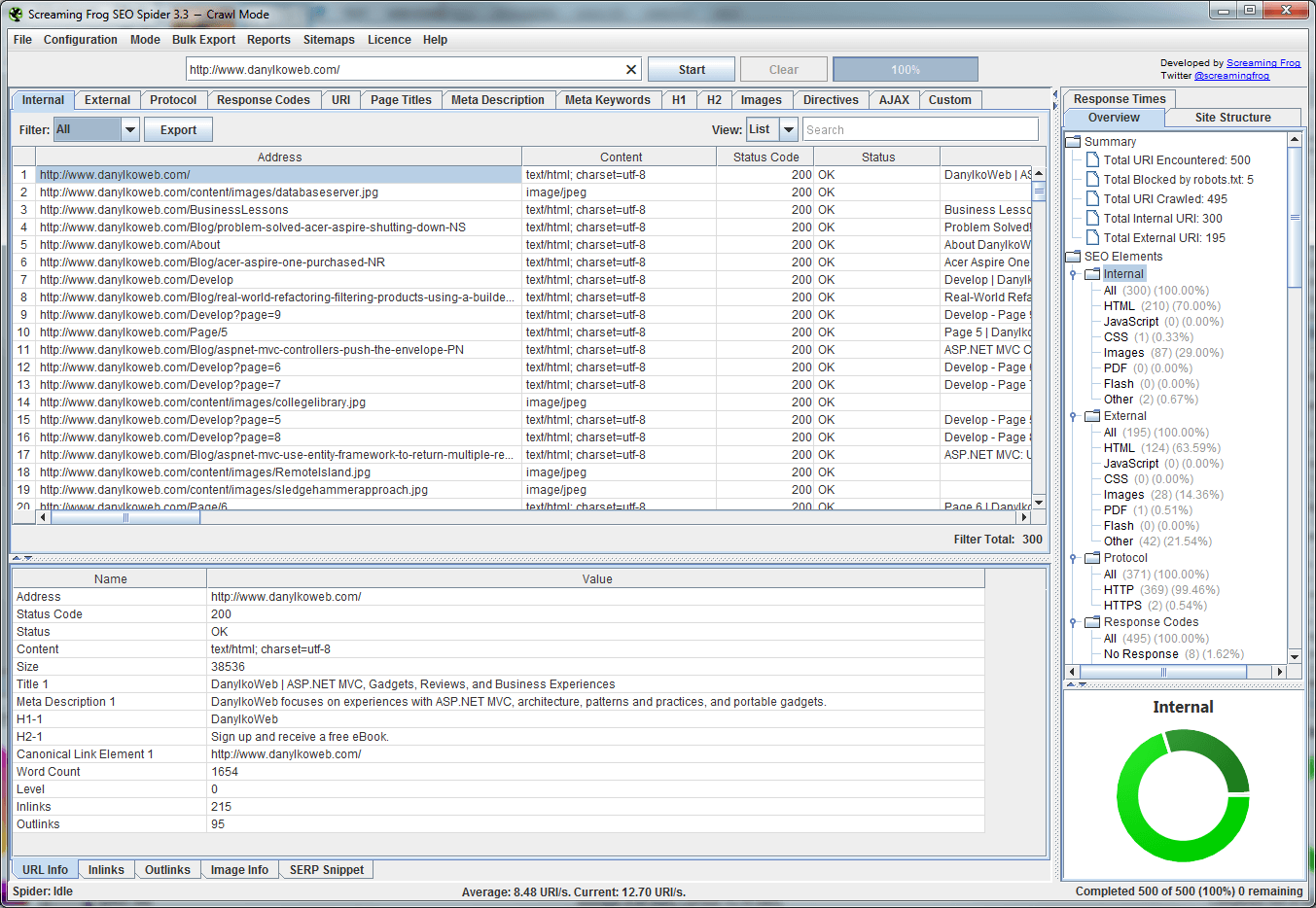
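If you're curious what a link checker does under the hood, here's a minimal Python sketch of the idea: pull every link out of a page, sort them into internal and external, then ask the server whether each one still exists. This is my own illustration and not how Xenu is actually implemented. The names `LinkCollector`, `split_links`, and `is_broken` are made up for this example, and a real crawler would also need to handle things like timeouts, retries, and robots.txt.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError, URLError
from urllib.parse import urljoin, urlparse
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def split_links(page_url, html):
    """Return (internal, external) lists of absolute URLs found in html."""
    collector = LinkCollector()
    collector.feed(html)
    site_host = urlparse(page_url).netloc
    internal, external = [], []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links
        parts = urlparse(absolute)
        if parts.scheme not in ("http", "https"):
            continue  # skip mailto:, javascript:, and similar
        if parts.netloc == site_host:
            internal.append(absolute)
        else:
            external.append(absolute)
    return internal, external


def is_broken(url, timeout=10):
    """Assumption: a link is 'broken' if it returns 4XX/5XX or no response."""
    try:
        # HEAD avoids downloading the body; some servers reject it.
        with urlopen(Request(url, method="HEAD"), timeout=timeout):
            return False
    except HTTPError as err:
        return err.code >= 400
    except URLError:
        return True
```

Calling `split_links("https://example.com/blog/", html)` on a page's HTML gives you two lists you can feed through `is_broken` one URL at a time. Doing that by hand for every page is exactly the tedium these tools save you from.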

Screaming Frog - SEO Spider

Screaming Frog is free for crawls of up to 500 URLs, which was enough to satisfy my curiosity about what other data I could get on my site. Along with checking for broken links, Screaming Frog can detect (taken from their site):

- Errors – Client errors such as broken links & server errors (No responses, 4XX, 5XX).

- Redirects – Permanent or temporary redirects (3XX responses).

- External Links – All external links and their status codes.

- Protocol – Whether the URLs are secure (HTTPS) or insecure (HTTP).

- URI Issues – Non ASCII characters, underscores, uppercase characters, parameters, or long URLs.

- Duplicate Pages – Hash value / MD5 checksums algorithmic check for exact duplicate pages.

- Page Titles – Missing, duplicate, over 65 characters, short, pixel width truncation, same as h1, or multiple.

- Meta Description – Missing, duplicate, over 156 characters, short, pixel width truncation or multiple.

- Meta Keywords – Mainly for reference, as they are not used by Google, Bing or Yahoo.

- File Size – Size of URLs & images.

- Response Time.

- Last-Modified Header.

- Page Depth Level.

- Word Count.

- H1 – Missing, duplicate, over 70 characters, multiple.

- H2 – Missing, duplicate, over 70 characters, multiple.

- Meta Robots – Index, noindex, follow, nofollow, noarchive, nosnippet, noodp, noydir etc.

- Meta Refresh – Including target page and time delay.

- Canonical link element & canonical HTTP headers.

- X-Robots-Tag.

- rel=“next” and rel=“prev”.

- AJAX – The SEO Spider obeys Google’s AJAX Crawling Scheme.

- Inlinks – All pages linking to a URI.

- Outlinks – All pages a URI links out to.

- Anchor Text – All link text. Alt text from images with links.

- Follow & Nofollow – At page and link level (true/false).

- Images – All URIs with the image link & all images from a given page. Images over 100kb, missing alt text, alt text over 100 characters.

- User-Agent Switcher – Crawl as Googlebot, Bingbot, Yahoo! Slurp, mobile user-agents or your own custom UA.

- Redirect Chains – Discover redirect chains and loops.

- Custom Source Code Search – The SEO Spider allows you to find anything you want in the source code of a website! Whether that’s Google Analytics code, specific text, or code etc. (Please note – This is not a data extraction or scraping feature yet.)

- and yes, an XML Sitemap Generator – You can create an XML sitemap and an image sitemap using the SEO spider.

Phew! This does a heck of a lot for a free product.

If you want to purchase it and remove the 500 URL limit, it's £99 ($150 US) for a one-year subscription.
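Two items from the feature list above, the error/redirect buckets and redirect chains, are easier to picture with a little code. Here's a rough Python sketch of both. It's only my illustration of the concepts, not Screaming Frog's actual logic. The function names are hypothetical, and I'm assuming you already have the crawl results in hand as a status code per URL and a simple `{url: target}` dictionary of redirects.

```python
def classify_status(code):
    """Bucket an HTTP status code; None stands for 'no response'."""
    if code is None:
        return "no response"
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client error"
    if 500 <= code < 600:
        return "server error"
    return "other"


def redirect_chain(start, redirects):
    """Follow a {url: target} map from start.

    Returns (chain, is_loop), where chain lists every URL visited in
    order and is_loop is True if the chain comes back to a URL it has
    already seen.
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, True  # we've been here before: a loop
        seen.add(current)
    return chain, False


# Example data (made up): /old hops twice before landing on /new.
hops = {"/old": "/older", "/older": "/new"}
chain, is_loop = redirect_chain("/old", hops)
print(" -> ".join(chain), "(loop)" if is_loop else "")
```

A chain longer than two entries means visitors (and search engines) are bouncing through extra hops before they reach the page, which is usually worth cleaning up by pointing the first URL straight at the final one.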

Conclusion

I know there are more out there, but to be honest, I haven't found any other sensational tools.

These two utilities have saved me countless hours and work just as well as any commercial product.

Have I missed any? Post your comments below.